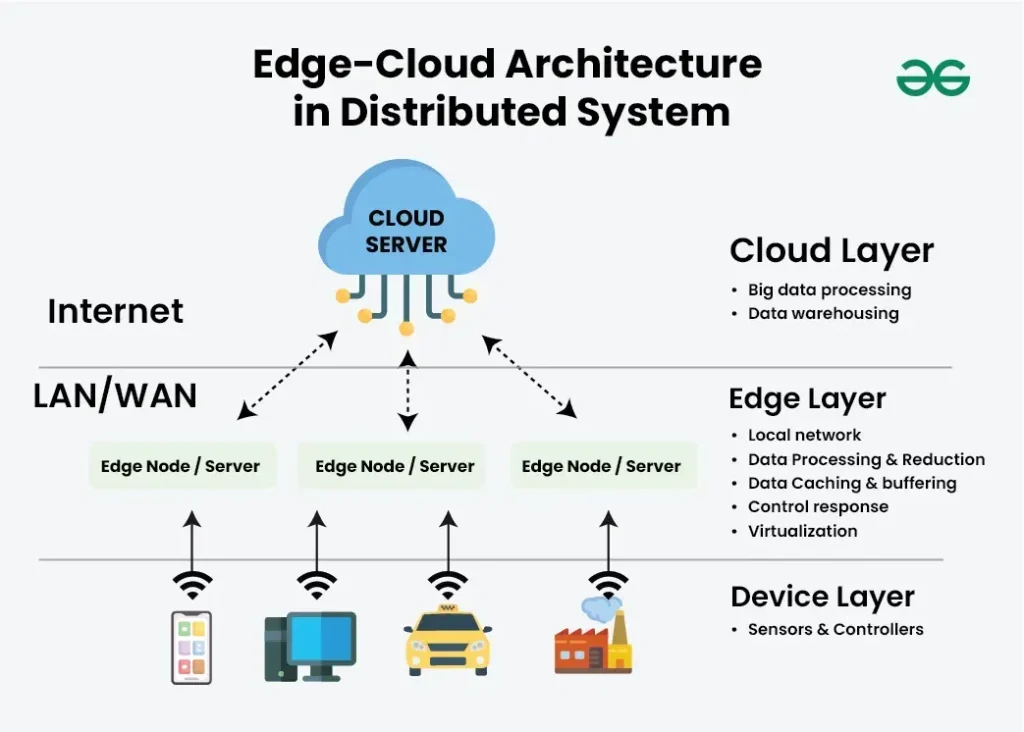

Cloud to Edge architectures are reshaping how businesses deploy software. They extend applications from centralized cloud data centers out to sensors, gateways, and devices at the edge, combining cloud scale with the ultra-low latency of local processing. By moving initial processing to edge nodes, applications can respond in real time while the cloud handles heavy analytics. This approach supports data sovereignty, reduces bandwidth costs, and fits naturally alongside hybrid cloud and other distributed systems architectures. For developers and IT leaders, mastering these patterns is essential to running secure, observable operations across both the edge and the cloud.

Another name for this shift is edge-to-cloud integration: computing stretches from centralized data centers toward edge resources closer to data sources. Edge-enabled platforms perform immediate processing at the device or gateway level, while the cloud supplies orchestration, analytics, and long-term storage. This hybrid, distributed approach follows broader trends in edge computing and distributed systems, balancing latency reduction with scalable insight. For practitioners, adopting near-edge processing and secure, policy-driven synchronization between layers helps unify governance and improve resilience.

Cloud to Edge architectures: Bridging Cloud and Edge for Real-Time Operations

Cloud to Edge architectures describe a continuum that blends cloud data centers with edge compute close to data sources. This approach moves processing closer to where data is generated, enabling faster response times, real-time analytics, and more resilient systems. By distributing compute across the edge and the cloud, organizations can react quickly to local conditions while preserving centralized governance and scale.

In practice, this pattern ties edge devices and cloud services into a single operational fabric. It also fits within hybrid cloud architectures, where data sovereignty, latency, and bandwidth determine where each piece of processing runs. The result is a distributed system that balances local intelligence with global orchestration, improving user experience and operational resilience.

Core patterns enabling edge-first and distributed processing

A core set of patterns underpins Cloud to Edge architectures. Centralized cloud with edge nodes places data collection and preliminary processing at the edge, while the cloud handles advanced analytics, model training, and global orchestration. This preserves cloud scalability and reduces latency for end users or devices at the edge.
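To make the edge/cloud split concrete, here is a minimal Python sketch of the preliminary processing an edge node might do before anything reaches the cloud. It assumes a hypothetical sensor that produces a window of float readings and a hypothetical alert threshold; the names `summarize_window` and `WindowSummary` are illustrative, not part of any platform API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class WindowSummary:
    """Compact record an edge node forwards instead of every raw sample."""
    sensor_id: str
    count: int
    minimum: float
    maximum: float
    average: float
    alerts: list[float]  # raw readings above the threshold, kept for cloud analysis


def summarize_window(sensor_id: str, readings: list[float], alert_threshold: float) -> WindowSummary:
    """Aggregate one window of raw readings on the edge node (hypothetical helper)."""
    if not readings:
        raise ValueError("a window must contain at least one reading")
    return WindowSummary(
        sensor_id=sensor_id,
        count=len(readings),
        minimum=min(readings),
        maximum=max(readings),
        average=mean(readings),
        alerts=[r for r in readings if r > alert_threshold],
    )


# Five raw samples collapse into one record; only that record leaves the site.
window = [20.1, 20.3, 20.2, 35.7, 20.4]
summary = summarize_window("press-07", window, alert_threshold=30.0)
print(summary)
```

Keeping the outlier readings alongside the aggregate is a design choice: the cloud can still train models on anomalies without receiving the full raw stream.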

Edge-first and edge-native services deploy workloads near data sources, so they can operate autonomously and synchronize with the cloud periodically. Hybrid cloud with data tiering keeps hot, time-sensitive data close to users while archiving older information in the cloud. Event-driven architectures connect edge and cloud components through publish/subscribe data flows and real-time streaming.
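The publish/subscribe flow can be sketched with a tiny in-process event bus. This is a hypothetical stand-in for a real broker such as an MQTT server, not a client for one; the topic layout (`site/<id>/telemetry/...`, `site/<id>/commands`) is an assumption, and the wildcard rules mirror MQTT's `+` (exactly one level) and `#` (all remaining levels).

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, dict], None]


def topic_matches(pattern: str, topic: str) -> bool:
    """MQTT-style matching: '+' matches one level, '#' matches the rest."""
    p_levels = pattern.split("/")
    t_levels = topic.split("/")
    for i, level in enumerate(p_levels):
        if level == "#":
            return True
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(p_levels) == len(t_levels)


class EventBus:
    """Hypothetical in-process broker; a deployment would use MQTT, Kafka, etc."""

    def __init__(self) -> None:
        self._subs: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, pattern: str, handler: Handler) -> None:
        self._subs[pattern].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        # Deliver to every handler whose pattern matches the topic.
        for pattern, handlers in self._subs.items():
            if topic_matches(pattern, topic):
                for handler in handlers:
                    handler(topic, payload)


bus = EventBus()
cloud_inbox: list = []
edge_inbox: list = []

# Cloud analytics consumes telemetry from every site.
bus.subscribe("site/+/telemetry/#", lambda t, p: cloud_inbox.append((t, p)))
# An edge gateway at site "a" listens for commands addressed to it.
bus.subscribe("site/a/commands", lambda t, p: edge_inbox.append((t, p)))

bus.publish("site/a/telemetry/temp", {"value": 21.5})          # edge -> cloud
bus.publish("site/b/telemetry/vibration", {"rms": 0.8})        # edge -> cloud
bus.publish("site/a/commands", {"action": "set_rate", "hz": 10})  # cloud -> edge
print(len(cloud_inbox), len(edge_inbox))
```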

Edge devices and cloud services: a practical collaboration in a distributed systems architecture

Edge devices and cloud services collaborate through lightweight runtimes, containers, and secure communication, forming a distributed systems architecture that spans the device, gateway, and cloud layers. This collaboration enables localized decision-making at the edge while preserving the power of centralized analytics and policy enforcement in the cloud.

Orchestration and lifecycle management extend from the cloud to the edge, coordinating deployments, updates, and fault recovery. A robust edge-to-cloud strategy provides consistent data models, secure data exchange, and unified governance, ensuring that edge services remain aligned with global policies and enterprise requirements.
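A minimal sketch of one lifecycle-management task, a batched rolling update with rollback, is shown below. The `EdgeNode` type, the version strings, and the health-check callback are hypothetical; a real orchestrator would also handle retries, timeouts, and partially reachable nodes.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class EdgeNode:
    name: str
    version: str
    previous: Optional[str] = None  # version to restore if the rollout fails


def rolling_update(nodes: list, new_version: str, batch_size: int,
                   is_healthy: Callable[[EdgeNode], bool]) -> bool:
    """Update nodes batch by batch. On any failed health check, roll back every
    node touched so far and stop. Returns True only if the whole fleet updated."""
    touched = []
    for start in range(0, len(nodes), batch_size):
        batch = nodes[start:start + batch_size]
        for node in batch:
            node.previous, node.version = node.version, new_version
            touched.append(node)
        if not all(is_healthy(node) for node in batch):
            for node in touched:
                node.version = node.previous
            return False
    return True


fleet = [EdgeNode(f"gw-{i}", "1.4.0") for i in range(1, 6)]
# Simulated health check: pretend gw-4 fails after receiving the new build.
ok = rolling_update(fleet, "1.5.0", batch_size=2, is_healthy=lambda n: n.name != "gw-4")
print(ok, [n.version for n in fleet])
```

Rolling back every touched node, not just the failing batch, keeps the fleet on a single known version; some teams instead pause and leave earlier batches updated for investigation.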

Latency, data sovereignty, and data flow in hybrid environments

Latency and bandwidth considerations drive many Cloud to Edge decisions. Processing at the edge reduces round-trip times for time-sensitive applications such as industrial automation, remote monitoring, and augmented reality, while the cloud handles heavier analytics and long-term storage.
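One way to make the latency trade-off explicit is to compare each workload's latency budget against the time each tier would take. The sketch below assumes hypothetical, pre-measured timings in milliseconds and a policy of preferring the cloud whenever it meets the deadline; bandwidth and cost are deliberately ignored.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    latency_budget_ms: float  # end-to-end deadline the application needs
    edge_ms: float            # estimated processing time on a local edge node
    cloud_rtt_ms: float       # network round trip from the site to the cloud region
    cloud_ms: float           # estimated processing time in the cloud


def place(w: Workload) -> str:
    """Pick a tier for one workload; prefer the cloud when it meets the budget."""
    if w.cloud_rtt_ms + w.cloud_ms <= w.latency_budget_ms:
        return "cloud"
    if w.edge_ms <= w.latency_budget_ms:
        return "edge"
    raise ValueError(f"{w.name}: no tier meets a {w.latency_budget_ms} ms budget")


workloads = [
    Workload("robot-arm-control", latency_budget_ms=10, edge_ms=4, cloud_rtt_ms=40, cloud_ms=2),
    Workload("daily-yield-report", latency_budget_ms=60_000, edge_ms=9_000, cloud_rtt_ms=40, cloud_ms=1_500),
]
for w in workloads:
    print(w.name, "->", place(w))
```

The control loop lands on the edge because the 40 ms round trip alone exceeds its 10 ms budget, while the report tolerates the round trip and benefits from cloud capacity.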

Data sovereignty and privacy concerns often require keeping sensitive data near its source. Data flow models in hybrid cloud architectures balance local processing with cloud-based analytics, using data tiering, policy-driven routing, and eventual consistency to synchronize states where appropriate without compromising regulatory compliance.
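Policy-driven routing can be as simple as a lookup from a data classification to a placement rule. In the sketch below, the classifications, region names, and the three rules are hypothetical examples rather than regulatory guidance, and treating a cloud region name as a jurisdiction is a simplification. Unknown classifications fail closed and stay at the edge.

```python
from dataclasses import dataclass, field

# Hypothetical policy table: data classification -> where the data may be processed.
POLICY = {
    "restricted": "edge-only",   # never leaves the site
    "personal": "in-region",     # may go to a cloud region in the same jurisdiction
    "operational": "any",        # may go to the central analytics region
}


@dataclass(frozen=True)
class Record:
    origin_region: str
    classification: str
    payload: dict = field(default_factory=dict)


def route(record: Record, cloud_regions: set, central_region: str) -> str:
    """Return a destination for one record according to POLICY."""
    rule = POLICY.get(record.classification, "edge-only")  # unknown label: fail closed
    if rule == "edge-only":
        return "edge"
    if rule == "in-region":
        if record.origin_region in cloud_regions:
            return f"cloud/{record.origin_region}"
        return "edge"  # no in-jurisdiction cloud region available
    return f"cloud/{central_region}"


regions = {"eu-west", "us-east"}
for rec in [
    Record("eu-west", "personal"),
    Record("ap-south", "personal"),
    Record("eu-west", "restricted"),
    Record("eu-west", "operational"),
]:
    print(rec.classification, rec.origin_region, "->", route(rec, regions, "us-east"))
```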

Security, governance, and observability in cloud-to-edge integration

Security by design is essential in cloud-to-edge integration. Zero-trust access, encryption in transit and at rest, hardware-backed trust, and regular patching for edge devices help protect data as it traverses the edge-to-cloud path.
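As one small, concrete piece of this, an edge device can sign each message so the cloud can reject anything altered in transit. The sketch uses Python's standard `hmac` module with SHA-256; the hard-coded key is a placeholder for a per-device secret that would normally live in a hardware-backed store. An HMAC provides integrity and authenticity only, not confidentiality, so it complements rather than replaces encryption in transit such as TLS.

```python
import hashlib
import hmac
import json

# Placeholder only: a real device key would come from a hardware-backed store, not source code.
DEVICE_KEY = b"example-device-key"


def sign(message: dict, key: bytes) -> dict:
    """Serialize deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(message, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "sig": tag}


def verify(envelope: dict, key: bytes) -> dict:
    """Recompute the tag and compare in constant time; reject on mismatch."""
    expected = hmac.new(key, envelope["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, envelope["sig"]):
        raise ValueError("signature mismatch: message rejected")
    return json.loads(envelope["body"])


envelope = sign({"sensor": "t-1", "value": 21.5}, DEVICE_KEY)
print(verify(envelope, DEVICE_KEY))
```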

Observability and governance span both edge and cloud layers. Unified telemetry, logging, tracing, and policy enforcement enable reliable operations in a distributed systems architecture, support incident response, and help validate compliance across edge and cloud environments.
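A key ingredient of unified tracing is a correlation ID that travels with the data from edge to cloud, so logs from both layers can be joined. The minimal sketch below uses a random hex ID and JSON log lines; production systems typically rely on a standard such as W3C Trace Context, often through a library like OpenTelemetry. The function names here are hypothetical.

```python
import json
import logging
import uuid

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("telemetry")


def emit(layer: str, event: str, trace_id: str, **fields) -> dict:
    """Write one structured log line tagged with the layer and trace ID."""
    record = {"layer": layer, "event": event, "trace_id": trace_id, **fields}
    log.info(json.dumps(record, sort_keys=True))
    return record


def edge_handle_reading(value: float) -> dict:
    """Edge side: start a trace and attach its ID to the outbound message."""
    trace_id = uuid.uuid4().hex
    emit("edge", "reading_received", trace_id, value=value)
    return {"trace_id": trace_id, "value": value}


def cloud_ingest(message: dict) -> dict:
    """Cloud side: reuse the incoming trace ID instead of minting a new one."""
    return emit("cloud", "reading_stored", message["trace_id"], value=message["value"])


msg = edge_handle_reading(3.2)
stored = cloud_ingest(msg)
```

Because both log lines carry the same `trace_id`, a query in the central log store can reconstruct the reading's full path across layers.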

Design, deployment, and operational best practices for Cloud to Edge architectures

Begin with clear business outcomes and measurable KPIs for latency, uptime, and data utility. Map data flows end-to-end to delineate edge versus cloud processing, and prefer open standards and lightweight protocols to reduce vendor lock-in.
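Latency KPIs are usually stated as percentiles rather than averages, because tail latency is what time-sensitive edge applications actually feel. The sketch below computes a nearest-rank percentile over hypothetical response-time samples and checks them against an assumed target of 20 ms at p95.

```python
import math
from statistics import mean


def percentile(samples: list, pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples <= it."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical edge response times (ms) for one measurement window.
latencies_ms = [12, 15, 11, 14, 48, 13, 12, 16, 14, 13,
                12, 90, 15, 13, 14, 12, 11, 13, 15, 14]
P95_TARGET_MS = 20  # assumed KPI

p95 = percentile(latencies_ms, 95)
print(f"mean={mean(latencies_ms):.2f} ms, p95={p95} ms, meets KPI: {p95 <= P95_TARGET_MS}")
```

With these samples the mean is 18.85 ms, which looks compliant, while the p95 is 48 ms; a KPI defined on the average would have hidden the two slow responses.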

Invest in automation, testing, and simulation to model edge connectivity and failure scenarios. Build for observability from day one, plan for scale with stateless edge services when possible, and extend CI/CD pipelines to the edge to enable secure, rolling deployments and rapid iteration across the edge-to-cloud continuum.
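A simulation of a connectivity failure can be small enough to run in CI. The sketch below pairs a hypothetical store-and-forward buffer on the edge with a simulated uplink that can be switched off, then checks what actually reaches the cloud. The buffer capacity and drop-oldest policy are assumptions chosen to show how a test surfaces data loss during an outage.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer outbound messages on the edge and deliver them in order when the
    uplink is available. When full, the oldest message is dropped (an assumed
    policy; alternatives include spilling to disk or sampling)."""

    def __init__(self, send: Callable[[str], bool], capacity: int = 1000) -> None:
        self._send = send
        self._buffer: deque = deque(maxlen=capacity)

    def submit(self, message: str) -> None:
        self._buffer.append(message)
        self.flush()

    def flush(self) -> None:
        while self._buffer:
            if not self._send(self._buffer[0]):
                return  # uplink down: keep the message and retry on the next flush
            self._buffer.popleft()

    @property
    def pending(self) -> int:
        return len(self._buffer)


class SimulatedUplink:
    """Test double for the edge-to-cloud link; toggle `up` to inject outages."""

    def __init__(self) -> None:
        self.up = True
        self.delivered: list = []

    def send(self, message: str) -> bool:
        if not self.up:
            return False
        self.delivered.append(message)
        return True


link = SimulatedUplink()
outbox = StoreAndForward(link.send, capacity=3)
outbox.submit("r1")
link.up = False                    # inject an outage
for msg in ["r2", "r3", "r4", "r5"]:
    outbox.submit(msg)             # r2 is evicted when r5 arrives (capacity 3)
link.up = True                     # link recovers
outbox.flush()
print(link.delivered, outbox.pending)
```

Here the simulation shows that `r2` is lost during the outage, which is the kind of behavior a team would rather discover in testing than in the field.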

Frequently Asked Questions

What are Cloud to Edge architectures and why do they matter in distributed systems?

Cloud to Edge architectures describe a continuum that distributes processing between centralized cloud data centers and edge resources, enabling low latency and local decision-making in distributed systems. They combine the cloud’s scalability with the responsiveness of edge computing: edge devices and gateways perform initial processing while the cloud handles analytics, orchestration, and governance. This approach improves performance, resilience, and data sovereignty across industries.

How does cloud to edge integration help reduce latency and optimize bandwidth?

Cloud to edge integration moves time-sensitive processing to edge nodes, reducing round-trip time for real-time applications and industrial controls. The edge computes and filters data locally so that only meaningful results travel upstream, while the cloud handles heavier analytics and long-term storage. This minimizes unnecessary data transfer to the cloud.

What is hybrid cloud architecture in the context of Cloud to Edge architectures?

Hybrid cloud architecture combines on-premises or edge resources with public cloud services to form a cohesive environment. In Cloud to Edge architectures, data tiering and consistent policies across edge and cloud keep hot data near its source while the cloud provides long-term storage and complex analytics.
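A data-tiering policy can be sketched as a split on record age: anything written within a hot window stays on the edge, and everything older becomes a candidate for archiving in the cloud. The 24-hour window and the `Item` type are assumptions for illustration; real tiering rules often also weigh access frequency and local storage limits.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Item:
    key: str
    written_at: datetime


def split_tiers(items: list, now: datetime, hot_window: timedelta) -> tuple:
    """Keep items younger than hot_window at the edge; mark the rest for cloud archive."""
    hot, cold = [], []
    for item in items:
        (hot if now - item.written_at <= hot_window else cold).append(item)
    return hot, cold


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)  # fixed clock for a reproducible example
items = [
    Item("reading-a", now - timedelta(minutes=5)),
    Item("reading-b", now - timedelta(hours=30)),
    Item("reading-c", now - timedelta(hours=2)),
]
hot, cold = split_tiers(items, now, hot_window=timedelta(hours=24))
print("edge:", [i.key for i in hot], "archive:", [i.key for i in cold])
```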

What are core patterns in Cloud to Edge architectures for edge devices and cloud services?

Key patterns include centralized cloud with edge nodes, edge‑first and edge‑native services, hybrid cloud data tiering, event‑driven and streaming architectures, and federated governance. These patterns help distribute compute and data across edge devices and cloud services for optimal latency, resilience, and manageability.

What security, governance, and compliance considerations apply to Cloud to Edge architectures?

Security should follow zero‑trust principles with encryption in transit and at rest, strong identity management, and regular patching for edge devices and cloud services. Governance must be distributed yet coherent, with consistent data ownership, access control, policy enforcement, and compliance reporting across both edge and cloud environments.

What best practices help implement Cloud to Edge architectures effectively?

Begin with clear business outcomes and end‑to‑end data flows. Use open standards and lightweight protocols to avoid vendor lock‑in, and invest in automated testing, simulations, and observability across edge and cloud. Treat edge deployment as an extension of the cloud CI/CD pipeline, ensuring security, governance, and monitoring are baked in across the edge devices and cloud services.

| Topic | Key Points |
|---|---|
| What are Cloud to Edge architectures? | A continuum distributing work between centralized cloud data centers and edge resources near data sources; blends cloud scalability and global reach with edge low latency, local processing, and data sovereignty. Edge nodes perform initial filtering, real-time inference, or control tasks; the cloud handles heavy analytics, long-term storage, global orchestration, and policy management. |
| Why they matter | Latency, bandwidth, and interoperability are the main drivers. Edge computing reduces round-trip time for time-critical apps and helps manage bandwidth by filtering and aggregating data locally, sending only meaningful information to the cloud. It also supports data residency requirements and enables localized decision-making; when designed well, Cloud to Edge architectures can lower operating costs, improve reliability, and unlock new capabilities. |
| Centralized cloud with edge nodes | Edge devices or gateways collect data and perform preliminary processing before sending summarized information to the cloud. The cloud handles advanced analytics, model training, and centralized orchestration, preserving cloud scalability while reducing latency and increasing edge resilience. |
| Edge-first and edge-native services | Workloads run primarily on edge resources, with edge-native microservices or serverless functions deployed near devices. The edge layer can operate autonomously and only sync with the cloud for updates or policy changes. |
| Hybrid cloud with data tiering | The cloud handles long-term storage and heavy analytics, while critical, time-sensitive data stays near the edge. Data tiering keeps hot data near users and archives cold data in the cloud; it requires consistent data models across layers for seamless movement. |
| Event-driven and streaming architectures | Publish/subscribe patterns and data streaming are central. Edge devices emit events that flow to the cloud for analysis, and cloud-originated commands propagate to edge nodes, enabling responsive, scalable systems. |
| Federated and distributed governance | Governance is distributed across cloud and edge; policies, security baselines, and compliance controls must be consistent, with clear data ownership, access, and lifecycle boundaries. |
| Key technologies and components | Edge computing hardware and gateways; containers and lightweight virtualization; orchestration and edge runtimes; APIs, microservices, and serverless at the edge; messaging protocols and data streaming (e.g., MQTT, lightweight REST/gRPC); AI/ML at the edge; data management and security; observability and telemetry. |
| Design patterns and architectural considerations | Latency vs. accuracy trade-offs; data sovereignty and privacy; data consistency and synchronization; resilience and fault tolerance; observability across layers; security by design; DevOps alignment with edge deployments. |
| Industry use cases | Manufacturing/IIoT for real-time monitoring and local anomaly detection; smart cities for local edge processing with cloud analytics; healthcare for on-site monitoring with secure cloud storage and analytics; retail and logistics for edge visibility and cloud-enabled optimization; autonomous systems for edge-based decisions with cloud updates. |
| Security, governance, and compliance | Zero-trust access and continuous verification; encryption in transit and at rest with cross-environment key management; regular edge software updates and vulnerability management; deterministic policy enforcement across cloud and edge; compliance-by-design with data lineage and audit trails. |
| Best practices | Start with clear business outcomes and KPIs; map data flows end-to-end; use open standards and lightweight protocols; invest in automated testing and simulation; build for observability from day one; plan for scale with stateless edge services and orchestration; prioritize security with hardware-backed trust and secure boot. |
| Future trends | AI at the edge enabling domain-specific inference; 5G and beyond expanding reach; open standards reducing integration friction; intelligent edge platforms for automated optimization; sustainable design focusing on energy-efficient edge compute and smarter data routing. |

Summary

Cloud to Edge architectures offer a practical and increasingly essential approach to building responsive, scalable, and secure technology platforms. By combining local edge processing with cloud capabilities, organizations can gain real-time insights, reduce data movement costs, and meet regulatory and operational demands across industries. Success depends on choosing the right patterns, adopting robust security and governance, and investing in observability and automation across the edge-to-cloud continuum. As technology evolves, a disciplined, data-driven approach to distributed architectures will help teams deliver solutions that perform when it matters most: at the edge, in the cloud, and everywhere in between.