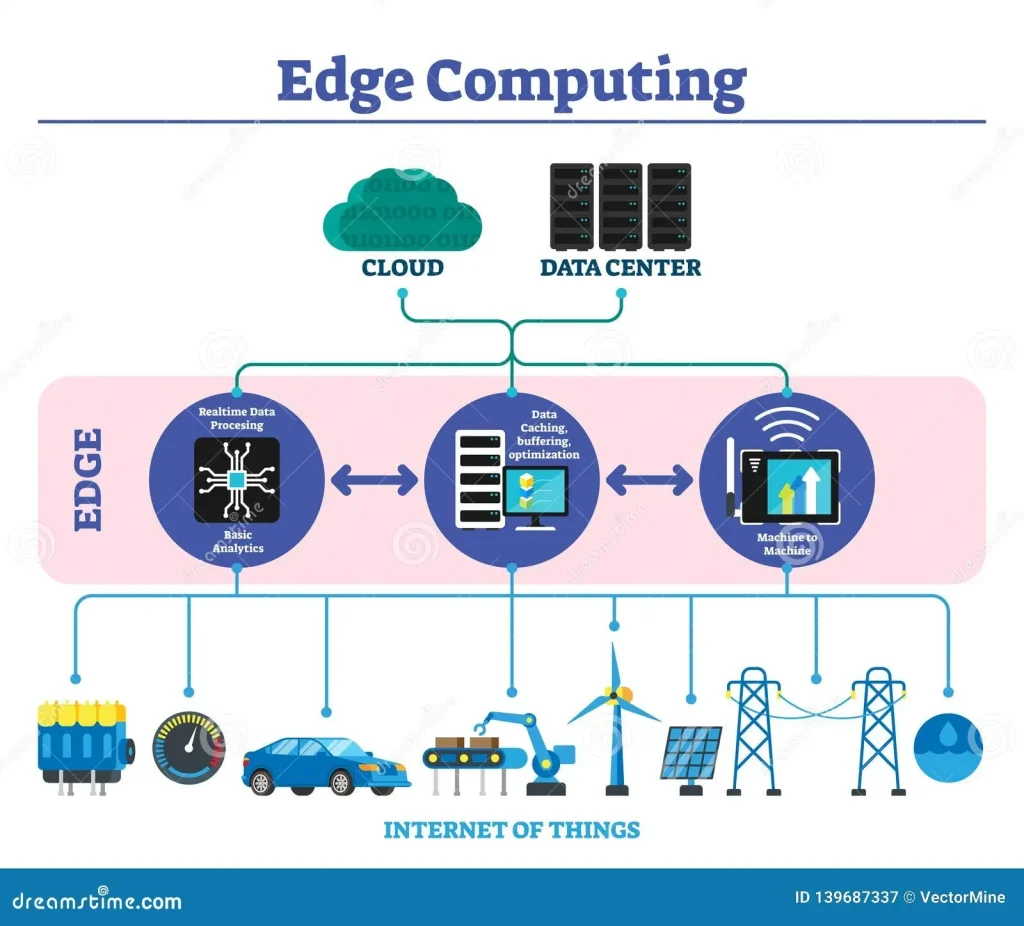

Edge Computing is redefining how organizations collect, process, and act on data by moving computation closer to where it is generated. Running workloads on sensors, devices, and localized gateways delivers faster responses, tighter security controls, and greater scalability. By design, low-latency edge processing analyzes data near the source and sends only relevant results onward. A robust strategy also depends on edge security best practices: encrypted data, secure identities, and continuous monitoring at the network edge. Together, a scalable edge infrastructure combined with edge analytics and AI enables real-time decision making across industries.

Put simply, edge computing is near-data processing at the network edge, sometimes called edge-native computing or distributed computing at the periphery: data is analyzed where it is born. This framing emphasizes locality, reduced backhaul, and immediate insights, using a mix of local devices, gateways, and micro data centers. Related terms such as fog computing, local inference, and on-site analytics describe overlapping approaches built on the same core idea of bringing computation closer to the source. By mapping these concepts onto practical architectures (edge nodes, micro data centers, and on-device intelligence), organizations can plan resilient, scalable deployments.

Edge Computing Benefits for Speed, Security, and Scale

Edge computing benefits organizations by moving compute closer to where data is generated, enabling faster responses and more predictable performance. With low-latency edge processing, data can be filtered, analyzed, and acted upon near the source, reducing round-trip times and easing bandwidth pressure on centralized data centers. This proximity is especially valuable for time-sensitive use cases in manufacturing, AR/VR, and remote monitoring, where milliseconds matter and the business can respond in real time. By distributing workloads across edge nodes, you can tailor resources to specific site needs while maintaining a cohesive overall strategy for speed, security, and scale.

Beyond speed, edge computing benefits include resilience and data governance advantages. Local decision-making means critical actions can continue even if connectivity to the cloud is intermittent, and only relevant insights are sent upstream, lowering cloud ingestion costs. When paired with scalable edge infrastructure, organizations can grow capacity incrementally—adding more edge nodes as demand grows and aligning hardware, software, and networks to a unified edge strategy. In practice, this blended approach supports safer, more efficient operations across distributed environments.
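The resilience point can be made concrete with a store-and-forward outbox, a common pattern for intermittent links. The sketch below assumes a hypothetical `send` callback that returns `True` when the cloud accepts a message; local actions would keep running independently, and only compact summaries wait in a bounded queue until the uplink returns. The class name, queue bound, and message fields are illustrative, not part of any specific platform.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class StoreAndForward:
    """Hypothetical edge-side outbox: queue summaries locally, flush when the uplink is up."""

    def __init__(self, send: Callable[[Dict], bool], max_items: int = 1000) -> None:
        self._send = send  # assumed to return True if the cloud accepted the message
        # Bounded queue: when full, the oldest summary is dropped to protect local storage.
        self._queue: Deque[Dict] = deque(maxlen=max_items)

    def publish(self, summary: Dict) -> None:
        self._queue.append(summary)
        self.flush()

    def flush(self) -> int:
        """Send queued summaries in order; stop at the first failure and keep the rest."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break
            self._queue.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._queue)


# Usage: simulate a link that is down, then comes back.
link_up = False
delivered: List[Dict] = []


def fake_send(msg: Dict) -> bool:
    if link_up:
        delivered.append(msg)
    return link_up


outbox = StoreAndForward(fake_send)
outbox.publish({"site": "plant-1", "max_temp": 81.5})
outbox.publish({"site": "plant-1", "max_temp": 79.0})
print(outbox.pending())  # 2 (both summaries held while offline)
link_up = True
print(outbox.flush())    # 2 (delivered in original order)
```

Dropping the oldest entries when the queue fills is one policy choice; a site might instead spill to disk or keep only the latest summary per metric.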

Harnessing Low-Latency Edge Processing for Real-Time Applications

Low-latency edge processing enables real-time analytics and control at the edge by shortening the data journey from sensor to action. When filtering, aggregation, and inference run locally, applications can respond within microsecond-to-millisecond windows, delivering faster safety responses, smoother user experiences, and more agile operational insights. This approach is essential for industrial automation, real-time monitoring, and immersive experiences, where every millisecond counts and delay is paid for in lost efficiency and safety.
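As a minimal sketch of filtering, aggregation, and a local decision in one step, the function below processes a single window of sensor readings. The plausibility bounds and alarm threshold are hypothetical parameters; a real pipeline would tune them per sensor.

```python
from statistics import mean
from typing import Dict, List


def process_window(readings: List[float], low: float, high: float,
                   alarm_threshold: float) -> Dict[str, object]:
    """Filter, aggregate, and decide locally for one window of readings.

    Values outside [low, high] are treated as sensor glitches and dropped
    (an illustrative assumption, not a universal rule).
    """
    valid = [r for r in readings if low <= r <= high]  # filter
    if not valid:
        return {"count": 0, "mean": None, "max": None, "alarm": False}
    peak = max(valid)
    return {
        "count": len(valid),               # aggregate: only these few fields leave the device
        "mean": mean(valid),
        "max": peak,
        "alarm": peak >= alarm_threshold,  # act locally, no cloud round trip
    }


summary = process_window([20.1, 20.4, -999.0, 95.2, 20.3],
                         low=-40.0, high=150.0, alarm_threshold=90.0)
print(summary["count"], summary["alarm"])  # 4 True
```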

Organizations can design edge pipelines that prioritize data locality, sending only the most relevant results to centralized services when appropriate. This strategy reduces bandwidth use and data-transfer costs while preserving the ability to extend insights across sites via centralized models or federated analytics. Embracing low-latency edge processing also supports proactive maintenance, anomaly detection, and real-time decision-making that keeps systems responsive under varying network conditions.
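Anomaly detection is a good example of keeping data local and sending only what matters. One simple technique, chosen here for illustration since the text does not prescribe a method, is a rolling z-score: the edge node keeps a short baseline of recent values and forwards only readings that deviate sharply from it. The window size and threshold are assumed values.

```python
from collections import deque
from math import sqrt
from typing import Deque, List, Tuple


class RollingZScore:
    """Flag readings that deviate strongly from a rolling baseline kept on the edge node."""

    def __init__(self, window: int = 20, threshold: float = 3.0) -> None:
        self.values: Deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def update(self, x: float) -> bool:
        anomalous = False
        if len(self.values) >= 2:
            n = len(self.values)
            mu = sum(self.values) / n
            sd = sqrt(sum((v - mu) ** 2 for v in self.values) / (n - 1))  # sample std dev
            anomalous = sd > 0 and abs(x - mu) / sd > self.threshold
        self.values.append(x)  # the baseline keeps adapting, including to spikes
        return anomalous


def forward_anomalies(stream: List[float], detector: RollingZScore) -> List[Tuple[int, float]]:
    """Return only the (index, value) pairs worth sending upstream."""
    return [(i, x) for i, x in enumerate(stream) if detector.update(x)]


stream = [10.0, 10.2, 9.9, 10.1, 10.0, 9.8, 10.1, 25.0, 10.0]
print(forward_anomalies(stream, RollingZScore(window=20, threshold=3.0)))  # [(7, 25.0)]
```

Because the spike is appended to the baseline, later readings are judged against a temporarily wider spread; excluding flagged values from the baseline is a reasonable alternative.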

Edge Security Best Practices for Distributed Environments

Security at the edge requires a defense-in-depth approach that addresses both devices and software running at distributed sites. Employ layered protections such as secure device identities, encryption at rest and in transit, minimal and tightly scoped edge software, and continuous anomaly monitoring. Hardware-enforced security features, secure boot, and tamper-evident mechanisms help ensure that edge nodes remain trustworthy even in less controlled environments. A holistic strategy also covers secure software supply chains, regular patching, and governance that tracks what runs where and who can access each component.
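To illustrate how secure device identities and message integrity fit together, here is a minimal sketch using HMAC-SHA256 from Python's standard library. It assumes each device holds a per-device symmetric key provisioned out of band; many deployments use per-device certificates and mutual TLS instead. This covers authenticity, integrity, and replay rejection only: encryption in transit would come from TLS, which is not shown.

```python
import hashlib
import hmac
import json
from typing import Dict, Optional


def sign_message(device_id: str, key: bytes, payload: Dict, seq: int) -> Dict[str, str]:
    """Bind a payload to a device identity and sequence number with an HMAC-SHA256 tag."""
    body = json.dumps({"device": device_id, "seq": seq, "payload": payload},
                      sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(msg: Dict[str, str], keys: Dict[str, bytes],
                   last_seq: Dict[str, int]) -> Optional[Dict]:
    """Return the decoded body if the tag is valid and the sequence number is new; else None."""
    try:
        decoded = json.loads(msg["body"])
        tag = msg["tag"]
        key = keys[decoded["device"]]  # unknown device -> rejected
    except (KeyError, TypeError, ValueError):
        return None
    expected = hmac.new(key, msg["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, tag):
        return None  # body was altered or signed with a different key
    if decoded["seq"] <= last_seq.get(decoded["device"], -1):
        return None  # replayed or out-of-order message
    last_seq[decoded["device"]] = decoded["seq"]
    return decoded


# Hypothetical key for illustration only; real keys belong in secure hardware or a vault.
keys = {"sensor-7": b"demo-key-not-for-production"}
seen: Dict[str, int] = {}
msg = sign_message("sensor-7", keys["sensor-7"], {"temp": 21.5}, seq=1)
print(verify_message(msg, keys, seen) is not None)  # True
print(verify_message(msg, keys, seen) is None)      # True (replay rejected)
```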

Integrating edge security with cloud security creates a coherent hybrid posture that protects data from the device to the cloud and back. This involves secure orchestration, role-based access controls, and monitoring across the entire path—edge, gateway, regional node, and cloud. By following edge security best practices, organizations can reduce data leakage risk, maintain regulatory compliance, and improve incident response times when threats are detected at the edge.
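Role-based access control across the edge-to-cloud path can be sketched as a deny-by-default lookup plus an audit trail. The roles, actions, and tier names below are hypothetical examples that mirror the path described above; a production system would load policy from a central store and ship audit records to monitoring.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

TIERS = ("edge", "gateway", "regional", "cloud")

# Hypothetical policy: role -> allowed (action, tier) pairs.
ROLE_PERMISSIONS: Dict[str, FrozenSet[Tuple[str, str]]] = {
    "site-operator": frozenset({("read", "edge"), ("read", "gateway"), ("restart", "edge")}),
    "analyst": frozenset({("read", "regional"), ("read", "cloud")}),
    "fleet-admin": frozenset((a, t) for a in ("read", "deploy", "restart") for t in TIERS),
}

AUDIT_LOG: List[Tuple[str, str, str, bool]] = []  # (user, action, tier, allowed)


def authorize(user: str, roles: Set[str], action: str, tier: str) -> bool:
    """Deny by default; allow only if some role grants (action, tier). Every decision is logged."""
    allowed = any((action, tier) in ROLE_PERMISSIONS.get(r, frozenset()) for r in roles)
    AUDIT_LOG.append((user, action, tier, allowed))
    return allowed


print(authorize("ana", {"site-operator"}, "restart", "edge"))  # True
print(authorize("ana", {"site-operator"}, "deploy", "edge"))   # False
```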

Scalable Edge Infrastructure: Designing for Growth Across Locations

A scalable edge infrastructure must accommodate a diverse set of devices, data formats, and workloads while delivering predictable performance. This requires modular hardware, software-defined networking, and flexible orchestration that can provision resources where they are needed. A typical layered architecture has four tiers: edge devices that generate data, edge gateways for initial processing, regional edge nodes for aggregation and analytics, and the cloud for long-term storage and cross-site coordination.
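The layered architecture can be sketched as a chain of functions in which each tier reduces the data before passing it on; here three raw readings become one site summary by the time they reach the cloud. Everything is illustrative: the tier boundaries follow the text, but the `Reading` type, the plausibility limit, and the in-memory `CloudStore` are hypothetical stand-ins for real gateways, regional nodes, and cloud storage.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List


@dataclass
class Reading:
    device: str
    value: float


def gateway_stage(readings: List[Reading], limit: float) -> Dict[str, float]:
    """Gateway tier: drop implausible values, then reduce to one mean per device."""
    per_device: Dict[str, List[float]] = {}
    for r in readings:
        if abs(r.value) <= limit:
            per_device.setdefault(r.device, []).append(r.value)
    return {device: mean(values) for device, values in per_device.items()}


def regional_stage(gateway_outputs: List[Dict[str, float]]) -> Dict[str, float]:
    """Regional tier: aggregate device means from several gateways into one site summary."""
    means = [m for out in gateway_outputs for m in out.values()]
    return {"devices": len(means), "site_mean": mean(means) if means else float("nan")}


@dataclass
class CloudStore:
    """Cloud tier: long-term storage (an in-memory list stands in for a real database)."""
    history: List[Dict[str, float]] = field(default_factory=list)

    def ingest(self, site_summary: Dict[str, float]) -> None:
        self.history.append(site_summary)


gw_a = gateway_stage([Reading("d1", 20.0), Reading("d1", 22.0), Reading("d2", 1e6)], limit=1000.0)
gw_b = gateway_stage([Reading("d3", 30.0)], limit=1000.0)
cloud = CloudStore()
cloud.ingest(regional_stage([gw_a, gw_b]))
print(cloud.history)  # [{'devices': 2, 'site_mean': 25.5}]
```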

Standards-based interfaces, containerization, and portable deployment models help ensure consistency and ease management across locations. Scalable edge infrastructure enables horizontal growth by adding edge nodes as demand grows and refreshing hardware to meet evolving workloads. When combined with edge analytics and AI, this approach unlocks near-data processing, real-time predictions, and localized decision-making while aiming to reduce data-transfer costs and protect user privacy by keeping sensitive data closer to the source.
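Horizontal growth is easier when adding a node does not force every device to re-home. Consistent hashing is one common way to achieve this (the text does not name a specific technique): devices map to points on a hash ring, and a new edge node takes over only the devices that now fall closest to it. Node and device names are hypothetical.

```python
import bisect
import hashlib
from typing import List, Tuple


def _hash(key: str) -> int:
    # Stable 64-bit hash; Python's built-in hash() is randomized per process.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring assigning devices to edge nodes."""

    def __init__(self, nodes: List[str], vnodes: int = 100) -> None:
        self.vnodes = vnodes  # virtual points per node smooth out the load
        self._ring: List[Tuple[int, str]] = []
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def node_for(self, device_id: str) -> str:
        if not self._ring:
            raise ValueError("ring has no nodes")
        idx = bisect.bisect(self._ring, (_hash(device_id), ""))  # first point >= device hash
        return self._ring[idx % len(self._ring)][1]  # wrap around the ring


ring = HashRing(["edge-a", "edge-b", "edge-c"])
devices = [f"sensor-{i}" for i in range(1000)]
before = {d: ring.node_for(d) for d in devices}
ring.add_node("edge-d")
after = {d: ring.node_for(d) for d in devices}
moved = [d for d in devices if before[d] != after[d]]
print(len(moved))  # expected to be roughly a quarter of the devices
```

Every device that moves lands on the new node, so existing nodes keep their local state for the devices they already serve.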

Edge Analytics and AI: Real-Time Insights at the Source

Edge analytics and AI bring advanced data science capabilities to the data source, enabling real-time insight without always routing data to centralized services. Running models near the data source reduces latency and creates faster, more autonomous decision-making loops. This approach supports predictive maintenance, fault detection, and personalized experiences by allowing inference, scoring, and pattern recognition to occur locally on edge nodes.
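Local inference can be as small as scoring a compact model on each new set of features. The sketch below uses a logistic-regression scorer for predictive maintenance; the features, weights, bias, and threshold are invented for illustration and are not trained on any real data.

```python
import math
from typing import Dict, Sequence

# Hypothetical pre-trained weights shipped to the edge node.
# Feature order: vibration RMS (mm/s), bearing temperature (°C), hours since last service.
WEIGHTS = (0.9, 0.05, 0.002)
BIAS = -9.0


def failure_probability(features: Sequence[float]) -> float:
    z = BIAS + sum(w * x for w, x in zip(WEIGHTS, features))
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def score_locally(features: Sequence[float], threshold: float = 0.5) -> Dict[str, object]:
    """Score on the edge node and decide immediately; only the result needs to go upstream."""
    p = failure_probability(features)
    return {"p_fail": p, "action": "schedule_maintenance" if p >= threshold else "none"}


print(score_locally((2.0, 45.0, 500.0))["action"])   # none
print(score_locally((7.5, 80.0, 2000.0))["action"])  # schedule_maintenance
```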

By bringing edge analytics into production, organizations can continuously refine models with local context while preserving privacy and lowering bandwidth requirements. The resulting insights can be streamed to the cloud for longer-term training and cross-site coordination, or used to trigger immediate actions at the edge. This combination of on-site AI and centralized optimization enables smarter, faster, and more resilient operations across manufacturing, transportation, and smart-city initiatives.
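One way to combine local refinement with centralized optimization is federated averaging, where each site trains on its own data and shares only model weights plus a sample count. This technique is an assumption on our part (the text mentions federated analytics but no specific algorithm); the sketch shows only the cloud-side aggregation step.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: List[Tuple[Sequence[float], int]]) -> List[float]:
    """Average per-site weight vectors, weighted by each site's sample count.

    Raw data never leaves the sites; only (weights, n_samples) pairs are shared.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(updates[0][0])
    if any(len(w) != dim for w, _ in updates):
        raise ValueError("all sites must report weight vectors of the same length")
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


site_updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]  # hypothetical (weights, samples)
print(federated_average(site_updates))  # [2.5, 3.5]
```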

Practical Deployment Strategies: From Edge Devices to Cloud Orchestration

Practical deployment starts with a clear data strategy that defines what should be processed at the edge versus what should be sent to the cloud. Consider governance, privacy, and compliance early, and choose compute models that align with workloads—whether GPU-accelerated edge nodes for AI inferencing or lightweight CPUs for event processing. Platforms should offer robust remote management, interoperability with existing IT investments, and security features built into the stack to simplify rollout across sites.
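A data strategy often starts as a simple placement rule. The sketch below encodes two criteria from the text (latency needs and sensitive data) plus the GPU-versus-CPU choice; the 60 ms cloud round-trip figure and the field names are hypothetical and would come from measurements and policy at each site.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    latency_budget_ms: float      # deadline the use case can tolerate end to end
    contains_personal_data: bool  # subject to privacy or data-residency rules
    needs_gpu: bool               # e.g. AI inferencing vs. lightweight event processing


def place(w: Workload, cloud_rtt_ms: float = 60.0) -> str:
    """Return a hypothetical placement: 'edge-gpu', 'edge-cpu', or 'cloud'."""
    # Keep the workload on site if a cloud round trip would miss the deadline
    # or if the raw data should not leave the site.
    if w.latency_budget_ms < cloud_rtt_ms or w.contains_personal_data:
        return "edge-gpu" if w.needs_gpu else "edge-cpu"
    return "cloud"


for w in [Workload("visual-defect-detection", 20, False, True),
          Workload("badge-reader-events", 500, True, False),
          Workload("monthly-energy-report", 60_000, False, False)]:
    print(w.name, "->", place(w))
```

Workloads placed at the edge can still send aggregated, non-sensitive results to the cloud.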

An effective rollout follows a phased plan that prioritizes high-impact use cases so that measurable Edge Computing benefits appear early. Plan for lifecycle management, including software updates, patch management, and failure recovery, while establishing meaningful metrics such as latency distributions, data transfer volumes, incident response times, and uptime. Pair edge deployments with governance, testing, and continuous improvement to balance performance, cost, and risk as your distributed architecture scales from edge devices to regional nodes and cloud services.
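Latency distributions are more informative than averages because tail latency is what users and control loops actually feel. A minimal way to report them is with percentiles from Python's `statistics.quantiles`; the sample data here is synthetic.

```python
from statistics import quantiles
from typing import Dict, Sequence


def latency_summary(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Report p50/p95/p99 and max for a batch of latency samples (needs at least 2 samples)."""
    cuts = quantiles(samples_ms, n=100, method="inclusive")  # 99 cut points: p1 .. p99
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98], "max": float(max(samples_ms))}


samples = [float(ms) for ms in range(1, 101)]  # synthetic: 1 ms .. 100 ms
print(latency_summary(samples))  # {'p50': 50.5, 'p95': 95.05, 'p99': 99.01, 'max': 100.0}
```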

Frequently Asked Questions

What is Edge Computing and how does low-latency edge processing improve application performance?

Edge Computing moves compute closer to data sources (sensors, devices, and gateways), enabling low-latency edge processing that filters, analyzes, and acts on data locally. This reduces round-trip times, improves real-time responsiveness for use cases like industrial automation and AR, and lowers bandwidth usage by sending only relevant insights to the cloud. The resulting benefits include faster decisions, tighter security, and more scalable operations.

How do edge computing benefits apply to industrial automation and real-time decision making?

The core edge computing benefits include local data processing, reduced latency, and minimized cloud data transfer. In industrial automation, edge processing enables immediate control actions and predictive maintenance, while real-time decisions are supported by nearby analytics and AI running at the edge.

What are the core edge security best practices to protect data at the edge?

Adopt a layered edge security strategy: establish secure device identities; encrypt data at rest and in transit; run minimal, well-scoped software on edge nodes; monitor for anomalies continuously. Use hardware-enforced security features, secure boot, and tamper-evident mechanisms; maintain a secure software supply chain; apply regular patches and maintain governance that tracks what runs where and who can access each component. Integrating edge security with cloud security creates a hybrid posture that protects data from device to cloud.

How does scalable edge infrastructure enable growth across sites and workloads?

A scalable edge infrastructure supports diverse devices, data formats, and workloads while preserving predictable performance. It relies on modular hardware, software-defined networking, and flexible orchestration to provision resources where needed. A typical layered architecture includes edge devices, edge gateways, regional edge nodes, and cloud services, enabling horizontal scaling of edge nodes and easier management through standards-based interfaces and containerization. Edge analytics and AI capabilities run near the data source for real-time predictions and localized decisions, reducing data transfer to the cloud and improving privacy.

What role do edge analytics and AI play in delivering real-time insights at or near the data source?

Edge analytics and AI enable models to run close to data sources, delivering real-time predictions, anomaly detection, and localized decision-making without routing all data to the central cloud. This improves latency, privacy, and resilience, while lowering bandwidth costs and enabling faster, context-aware actions.

What practical steps should an organization take to design, deploy, and operate edge-enabled systems to realize edge computing benefits while balancing performance, cost, and risk?

Start with a clear data strategy that defines what should be processed at the edge versus in the cloud, addressing governance, privacy, and compliance. Choose an edge computing platform that fits your workloads and supports remote management and strong security. Build a phased deployment plan prioritizing high-impact use cases to demonstrate edge computing benefits quickly. Use modular hardware, containerization, and interoperable interfaces to ease scalability, and measure latency, data transfer, uptime, and business value to guide ongoing optimization.

| Aspect | Key Point |
|---|---|
| Definition | Edge Computing moves computation closer to data sources (sensors, devices, gateways) to improve response times, security, and scalability, shifting from cloud-only architectures to a distributed model. |
| Speed & Latency | Distributes compute to the edge to enable low-latency processing, so data can be filtered, analyzed, and acted on locally before results are sent to the cloud. |
| Security | Requires layered security: secure identities, encryption, minimal software on edge nodes, continuous monitoring, secure boot, tamper-evident hardware, and governance of software supply chains. |
| Scalability & Architecture | Modular hardware, software-defined networking, and flexible orchestration; a layered approach with edge devices, edge gateways, regional nodes, and cloud for storage and coordination; standard interfaces and containerization enable portability. |
| Use Cases | Manufacturing, connected vehicles, smart cities, healthcare, energy, and other domains where timing, reliability, and data sovereignty matter. |
| Practical Considerations | Data strategy defining what is processed at the edge vs. in the cloud; governance, privacy, and compliance; platform compatibility, remote management, and security features; phased deployment and measurable metrics. |
| Edge Analytics & AI | Models run near data sources for real-time predictions and local decisions, reducing data transfer to the cloud and improving privacy. |
| Performance Trade-offs | Balance latency, cost, and risk through careful hardware and software selection and deployment planning. |

Summary

Edge Computing represents a paradigm shift in how modern technology processes data, delivering faster responses, stronger security, and scalable architectures that meet growing demands. By emphasizing speed through low-latency edge processing, enacting comprehensive edge security best practices, and building scalable edge infrastructure, organizations can unlock new levels of operational efficiency and customer value. As edge analytics and AI continue to mature, the ability to derive real-time insights at or near the source will become increasingly essential for competitive advantage. Whether you’re optimizing a factory floor, supporting smart city initiatives, or enabling seamless remote operations, Edge Computing offers a practical framework for turning data into timely, trustworthy actions. Embrace the edge to accelerate decision-making, reduce risk, and scale your digital initiatives in a rapidly evolving technology landscape.